Dall-E’s AI-Generated Pictures: The Key to Both Thrilling and Scary Media Realities

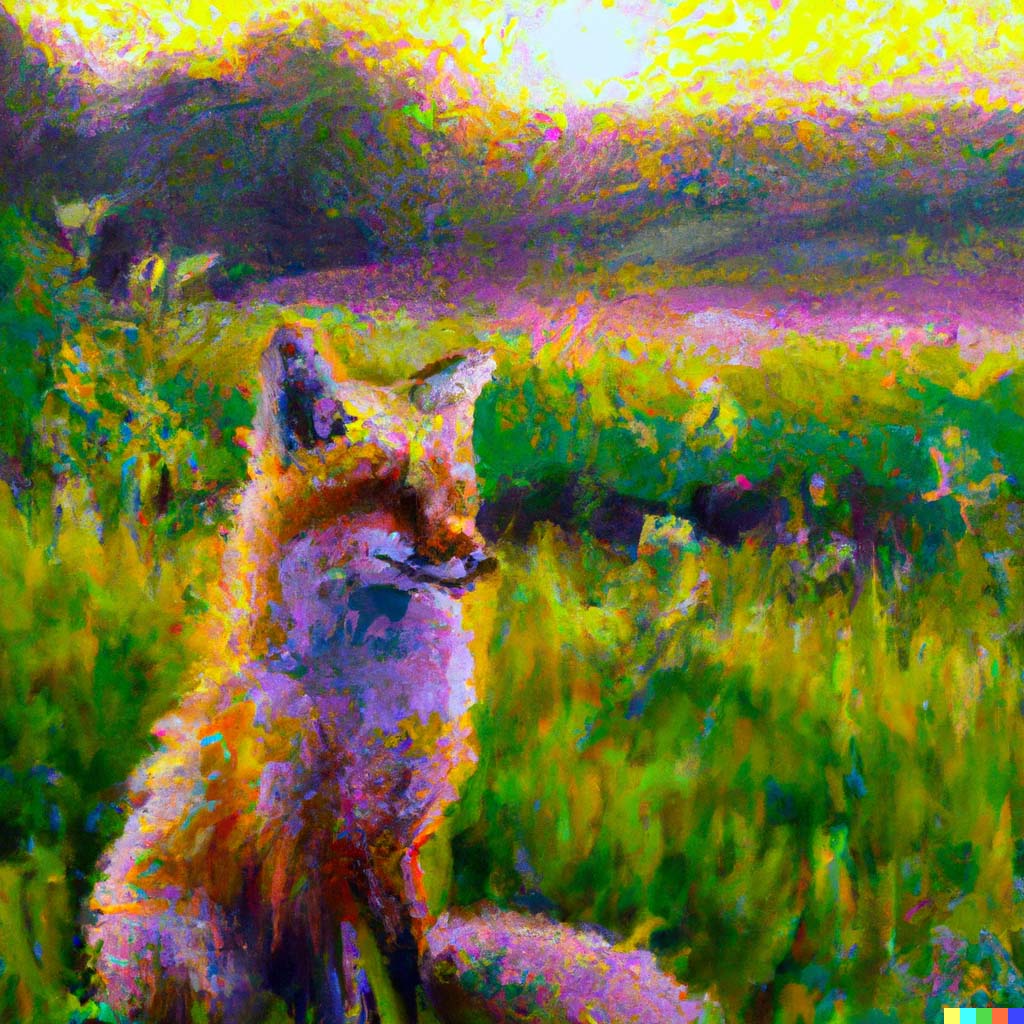

Modern creative AI shows that machines can become recognized visual artists. Take Dall-E: this inventive software paints whatever you imagine. The fascinating part is that any user can visualize their beliefs, desires, and daydreams. It all sounds inspiring and harmless until we face the other side of that creativity. So, can AI-generated artwork bring danger and harm?

Artistic AI’s Purpose

It is too early to assume that tools like Dall-E, ArtBreeder, and other AI-powered visual instruments will replace artists. The primary purpose of such tools is to meet the demand for instant visuals. For example, Dall-E can “draw” everything from illustrations for analytical articles to cute anime fan art. We cannot equate those pictures with human art, but they are good enough to decorate serious texts or entertain fans of a particular media product.

The Principles of Functioning

There is also the power of visualizing whatever pops into the user’s mind. Dall-E stands out because it builds a picture from a text caption. In effect, the program grasps the meaning of the words and the relationships between the objects they name. For instance, type “a lady in a forest,” and you get ten pictures of a woman standing in photorealistic woods. Then try typing “a lady sitting on the grass in the forest.” The AI will understand that the character must interact with the object, here by sitting on the grass.

That is where Dall-E wins over tools like ArtBreeder. The latter is a convenient instrument too, but it is all about toggling buttons to create a picture within a limited range of variations. With Dall-E, your ideas are rendered more specifically and accurately.

The Bias Issue

Of course, this AI learns from human culture, so it leans on the most popular views and beliefs instead of picturing objective reality. As a result, it tends to reproduce stereotypes. And it is no secret that bias is still a problem in that culture.

Let us take an example. A user might need a picture of a CEO. People of every gender hold that position, but the AI will tend to return a series of images showing only men. Female characters will either be in the background or absent altogether. That is when we must remember that we are playing with a reflection of how the masses see reality.

The same applies beyond gender. Nationality, body type, style: the AI shows bias in these categories as well. Sure, a user may add keywords to alter the image. But the balance is still missing by default. And it is not the AI’s fault, since it only reflects the culture it learned from.

Improper and Violent Content

A tech enthusiast, Alex Kantrowitz, tested whether the AI would carry out ideas with destructive intent. He chose a political topic and asked the AI to draw Donald Trump and Joe Biden in a violent context. Keywords like “impaling” and “blood-covered” got blocked immediately. So there are filters that stop users from producing aggressive imagery, such as pictures depicting murder and mutilation. Note, however, that this obstacle exists only because the developers enabled it. And there might still be ways to deceive the system and bypass the terms of service.
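To make the idea of a keyword filter concrete, here is a minimal sketch in Python. It is not how Dall-E's actual filter works, which is not described in this article; the blocklist and the function names `find_blocked_terms` and `is_allowed` are invented for illustration, with the two blocked words taken from the test above.

```python
import re

# Hypothetical blocklist for illustration only; the real service's
# filter terms and logic are not public in this article.
BLOCKED_TERMS = {"impaling", "blood-covered"}


def find_blocked_terms(prompt: str) -> list[str]:
    """Return the blocked terms that appear as whole words in the prompt."""
    # Keep hyphenated words together so "blood-covered" stays one token.
    words = re.findall(r"[a-z]+(?:-[a-z]+)*", prompt.lower())
    return sorted(set(words) & BLOCKED_TERMS)


def is_allowed(prompt: str) -> bool:
    """A prompt passes only if it contains no blocked term."""
    return not find_blocked_terms(prompt)
```

A simple filter like this also shows why bypassing is plausible: a prompt that describes the same scene without any listed word, such as “a figure covered in red liquid,” would pass the check.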

The Verdict

AI is objectively a powerful tool for artistic creation and visualization. Yet the results can be unpleasant, whether users keep offensive content to themselves or publish it. So, discretion is advisable.