Best Proxies for Scraping and Crawling

Web scraping and crawling are essential components of modern marketing. However, websites often deny access to data collectors, so constantly changing IP addresses is necessary. To ensure uninterrupted data collection, web scraping relies on proxies. Yet, with so many different proxy providers available out there, finding the best one can take time and effort. To help you out, we've researched the most popular proxy services and identified those best suited for data extraction.

Overview

Type: Residential Proxies, Mobile Proxies, ISP Proxies, Datacenter (IPv4 & IPv6)

Locations: Germany, France, Netherlands, Spain, Brazil, India, Turkey, Canada, Japan, South Korea, Sweden, Poland, Malaysia, Indonesia, Australia, China, Taiwan, USA, England, and 210+ more

- 4,7 Users feedback

- 4,6 Experts evaluation

- 4,8 Proxybros rank

- SOCKS5

- HTTP

- HTTPS

- Proxy types for different use cases

- 99% uptime

- High connection speed

- Loyal customer support

- Mobile proxy setup and management

- Lack of free trial

- Mobile Proxies

Locations: Russia, Germany, France, Netherlands, Spain, India, UK, Czech Republic, Lithuania, Portugal, Ukraine, Denmark, Romania, Poland, Indonesia, Australia, Thailand, Israel, Belarus, Bulgaria, Georgia, Kazakhstan, Latvia, Moldova, USA, Armenia, Estonia, Kyrgyzstan

- 4,7 Users feedback

- 4,1 Experts evaluation

- 4,5 Proxybros rank

- SOCKS5

- HTTP

- Private channel

- Unlimited bandwidth and traffic

- Multi-threading

- HTTP and SOCKS5 support

- Fairly quick speeds of 3-30 Mb

- IP change by link (API) or timer

- Limited proxy types and locations

- Relatively high prices (yet 100% match the quality)

Type: Residential Proxies, Static Residential Proxies, SOCKS5 Proxies, Mobile Proxies, Rotating ISP Proxies, ISP Proxies, HTTPS Proxies, Dedicated Private Proxies

Locations: Russia, Germany, France, Italy, Netherlands, Spain, US, Brazil, India, Mexico, Turkey, UK, Canada, Japan, Belgium, Czech Republic, Lithuania, Portugal, Slovenia, Ukraine, Hungary, Romania, Greece, Norway

- 4,2 Users feedback

- 4 Experts evaluation

- 4,5 Proxybros rank

- SOCKS5

- HTTP

- HTTPS

- Unlimited concurrent sessions

- Dedicated account manager

- Chrome extension

- Web unlocker

- Data Collector and Data Collector IDE

- Expensive proxies

Type: Residential Proxies, Mobile Proxies, Corporate Proxies

- 190+

- 4,8 Users feedback

- 4,1 Experts evaluation

- 4,6 Proxybros rank

- SOCKS5

- HTTP

- Worldwide coverage

- API support

- 7-day free trial

- Free and very flexible geo-targeting

- Username and password authentication

- IP whitelist

- Limited payment options

Type: Residential (mobile and Wi-Fi), Datacenter (in some countries), US ISP

- All

- 4,7 Users feedback

- 4,2 Experts evaluation

- 4,5 Proxybros rank

- SOCKS5

- HTTP

- UDP (for Residential)

- Huge IP pool across the globe

- Unlimited connections (ports)

- Email OTP authentication

- UDP protocol support

- Compatibility with multiple anti-detect browsers

- 24/7 human support

- Partnering with the WEDF to ensure full compliance with industry regulations.

- No app or browser extension

- No free trial, although 100 MB costs only $1.99 for 3 days

Type: Datacenter Proxies, Residential Proxies, Static Proxies

- 220+

- 4,5 Users feedback

- 4,1 Experts evaluation

- 4,1 Proxybros rank

- SOCKS4

- SOCKS5

- HTTP

- HTTPS

- UDP

- UDP protocol support

- High anonymity

- Large IP pool and wide geo-coverage

- Unlimited concurrent sessions

- Rotating/sticky sessions

- Free geo-targeting (by country, state, city, and ASN)

- Good reputation

- Limited proxy types

- Lack of extra tools

Type: Static Residential Proxies, Rotating Residential Proxies, Rotating Mobile Proxies

Locations: UK, Canada, USA, Random Locations

- 4,8 Users feedback

- 4,1 Experts evaluation

- 4,2 Proxybros rank

- HTTP

- HTTPS

- High-quality private proxies

- Good proxies for Instagram

- Fast 4G mobile proxies

- Rotating proxies come with a 60-minute static session

- Static residential IP servers keep the same IP for a minimum of 30 days

- Anonymous undetectable servers

- Expensive pricing plans

- Inappropriate proxies for games

- Limited targeting

Type: Residential Proxies, Static Residential Proxies, Rotating ISP Proxies, Static Datacenter, Unlimited Residential Proxies

Locations: Europe, Africa, Antarctica, Asia, North America, Oceania, South America

- 4,6 Users feedback

- 4,5 Experts evaluation

- 4,6 Proxybros rank

- 99.9% success rate

- Solid geo coverage

- Precise targeting options

- A massive IP pool

- Unlimited concurrent sessions

- Support for HTTP, HTTPS, and SOCKS5

- Encrypted proxy connection

- A user-friendly dashboard platform

- Competitive pricing

- Easy integration

- Post-purchase assistance

- No mobile proxies

- No refund policy in place

- Dedicated 4G/5G Modems (Mobile Proxies)

Locations: Russia, Germany, France, Italy, Netherlands, Spain, US, Brazil, India, UK, Canada, Czech Republic, Lithuania, Poland, Ireland, Belarus, Georgia, Kazakhstan, Slovakia, EU

- 4 Users feedback

- 4,1 Experts evaluation

- 4 Proxybros rank

- SOCKS5

- HTTP

- HTTPS

- A large number of networks and subnets

- Competitive prices

- Works with all types of websites/apps

- Somewhat expensive mobile proxies

- No residential proxies

- Network issues from time to time

Type: Residential Proxies, Mobile Proxies, Rotating Residential Proxies, Sticky Proxies

Locations: Germany, France, Italy, Netherlands, Spain, US, India, UK, Canada, Belgium, Malaysia, Indonesia, Thailand, Vietnam

- 4,9 Users feedback

- 4,6 Experts evaluation

- 4,7 Proxybros rank

- SOCKS5

- HTTP

- HTTPS

- A massive pool with 30+ million IPs

- No limits on IP rotation

- 4G/5G high-stealth mobile proxies

- HTTPS and SOCKS5 protocols supported

- 98.6% request success rate

- It is affordable and offers many flexible plans

- There are no separate services for gamers

- In the Micro plan, additional GBs are relatively expensive: $5/GB

Type: Residential Proxies, Rotating Residential Proxies, Sticky Proxies

Locations: Germany, France, Italy, Netherlands, Spain, US, India, UK, Canada, Belgium, Malaysia, Indonesia, Thailand, Vietnam (150+ countries, 1400+ cities)

- 4,2 Users feedback

- 4,4 Experts evaluation

- 4,7 Proxybros rank

- SOCKS5

- HTTP

- The dedicated quality-first approach

- Record-beating sticky sessions

- City-level targeting in 150+ countries

- Unmatched customer support

- Rolling over the unused bandwidth

- Limited proxy types

- No free tools or add-ons

Allows +2 GB when purchasing any package, except trials. Coupon code: BROS NJGH854NGHKL

Type: Static Residential Proxies, Rotating Residential Proxies, Rotating Mobile Proxies, Dedicated Mobile Proxies, Rotating Datacenter Proxies

- All countries (170+)

- 4,5 Users feedback

- 4,7 Experts evaluation

- 4,7 Proxybros rank

- SOCKS5

- HTTP

- 170+ locations

- 25 million IP addresses

- High-quality scraping tools

- Fast mobile proxy servers

- Sticky session up to 1 hour

- Affordable pricing plans

- Inappropriate for gaming

- No free trial

Type: Anonymous, Datacenter Proxies, Residential Proxies, SOCKS5 Proxies, Rotating ISP Proxies, Search Engine Proxies, Shared Proxies, HTTP Proxy, Sneaker Proxies, Backconnect Proxies, Dedicated Rotating Proxies

Locations: Germany, France, Italy, Netherlands, Spain, US, Brazil, India, Mexico, UK, Canada, Japan, Belgium, Czech Republic, Lithuania, Portugal, Slovenia, Ukraine, Denmark, Hungary, Romania, Greece

- 4,7 Users feedback

- 4,5 Experts evaluation

- 4,5 Proxybros rank

- SOCKS5

- HTTP

- HTTPS

- Various pricing options

- Unlimited threads

- Versatile scraping APIs

- 99.99% uptime

- 3-day money-back guarantee

- 24/7 support

- Overall expensive pricing

Type: Datacenter Proxies, Residential Proxies, Static Residential Proxies, Mobile Proxies, Rotating ISP Proxies

- 195+

- 4,7 Users feedback

- 4,5 Experts evaluation

- 4,5 Proxybros rank

- SOCKS5

- HTTP

- HTTPS

- 24/7 live customer support

- HTTP, HTTPS, SOCKS5 protocols supported

- A pool of IPs: 100m+ IPs around the world

- Dedicated Account Manager

- Reliable proxy resources

- Limited pricing options

Type: Datacenter Proxies, Residential Proxies, Mobile Proxies, ISP Proxies, Enterprise Proxies

Locations: Germany, France, Italy, Netherlands, Spain, Albania, Algeria, Andorra, US, Brazil, India, Mexico, Argentina, Turkey, UK, Canada, Japan, Belgium, Czech Republic, Lithuania, Portugal, Denmark, Hungary, Romania, Greece, South Korea, Sweden, Finland, Switzerland, Norway, Poland, Cyprus, Malaysia, Singapore, Indonesia, Australia, China, Egypt, Philippines, South Africa, Thailand, Vietnam, Ireland, New Zealand (195 countries worldwide)

- 4,4 Users feedback

- 3,8 Experts evaluation

- 4,1 Proxybros rank

- SOCKS5

- HTTP

- HTTPS

- 100% genuine, ethical residential proxies

- Great customer support

- A large proxy pool

- No free trial

Type: Datacenter Proxies, Mobile Proxies, Rotating Residential Proxies, Static ISP Proxies

Locations: Germany, France, Italy, Netherlands, Spain, US, Brazil, India, UK, Canada, Japan, Belgium, Lithuania, Portugal, Slovenia, Ukraine, Denmark, Hungary, Greece, Sweden, Finland, Switzerland, Norway, Poland, Cyprus, Malaysia, Singapore, Indonesia

- 4,8 Users feedback

- 4,7 Experts evaluation

- 4,9 Proxybros rank

- HTTP

- HTTPS

- A huge proxy pool (52M+ residential IPs);

- The service uses DiviNetworks, enhancing stability and speed;

- Global coverage and advanced geo-targeting;

- A variety of subscription plans;

- 7-day free trial for certified companies;

- Micro-testing plans for individual users.

- Limited bandwidth;

- No SOCKS5 support.

- Datacenter Proxies

- Private Proxies

Locations: Russia, Germany, France, Italy, Netherlands, Spain, US, Brazil, India, Mexico, Turkey, UK, Canada, Japan, Belgium, Czech Republic, Lithuania, Portugal, Sweden, Finland, Switzerland, Norway, Poland, Cyprus, Indonesia, Australia, China, South Africa, Ireland, Austria, Bangladesh, Belarus, Bulgaria, Georgia, Kazakhstan, Korea, Latvia, Malta, Moldova, Nigeria, Serbia, Slovakia

- 4,1 Users feedback

- 4,2 Experts evaluation

- 3,9 Proxybros rank

- SOCKS5

- HTTP

- HTTPS

- An extensive list of free network tools

- Affordable prices

- IPv6 support

- Affiliate Program

- No city-level targeting

- No residential proxies

- Limited UI language support

Type: Static Residential Proxies, Sneaker Proxies, Rotating Residential Proxies, Cloud Hosting

Locations: Germany, France, Italy, Netherlands, Spain, US, Brazil, India, UK, Canada, Belgium, Lithuania, Portugal, Denmark, Hungary, Finland, Switzerland, Norway, Poland, Cyprus, Singapore

- 3,8 Users feedback

- 3,2 Experts evaluation

- 3,5 Proxybros rank

- SOCKS4

- SOCKS5

- HTTP

- HTTPS

- One of the largest pools and location coverage

- Tier-1 bandwidth

- Good pricing

- 3-day money-back guarantee

- Sufficient scraping performance for some sites

- High proxy speed

- Connection errors

- Limited session control

Type: Residential Proxies, Static Residential Proxies, Mobile VPN, Desktop VPN, Private Proxies

Locations: Russia, Germany, France, Italy, Netherlands, Spain, US, Brazil, India, Mexico, UK, Canada, Japan, Belgium, Lithuania, Slovenia, Ukraine, Denmark, Hungary, Romania, Greece, South Korea, Sweden, Finland

- 3,7 Users feedback

- 3,5 Experts evaluation

- 3,2 Proxybros rank

- SOCKS5

- HTTP

- HTTPS

- Geo-targeting

- Highly rated customer support

- Good interface

- Fast connection

- Web crawlers operation

- High prices

- No mobile IPs

Type: Anonymous, Datacenter Proxies, Mobile Proxies, ISP Proxies

Locations: Russia, Germany, France, Italy, Netherlands, Spain, US, Brazil, India, Mexico, UK, Canada, Japan, Belgium, Czech Republic, Lithuania, Portugal, Slovenia, Ukraine, Denmark, Hungary, Romania, Greece

- 4 Users feedback

- 3,5 Experts evaluation

- 4,1 Proxybros rank

- SOCKS5

- HTTP

- HTTPS

- Customizable pricing plans

- Ethically sourced proxies

- Flexible proxy service

- Locations diversity

- Unlimited threads

- Specialization in datacenter proxies

Proxies for Scraping and Crawling: How Do They Work?

The terms ‘web crawling’ and ‘web scraping’ are often used interchangeably to describe the process of mass searching for data. But is that correct? Not really. So let’s clarify the difference between the two processes.

Web crawling means systematically browsing the web, following links from page to page to discover where the necessary information lives. Simply put, it tries to cover as many pages as possible.

But what is web scraping, then? It is a more specific process used when you have found the data and want to extract it or, in other words, download it. Web crawling and web scraping are not isolated and, as a rule, are parts of the same process.

A proxy for web scraping or crawling is an intermediary that routes your traffic through itself and replaces your IP address with its own. When you send a site request via an intermediary, the site does not see your IP. It only sees the proxy server’s IP address, allowing you to browse (or parse) web pages anonymously. These proxies are used to:

- Bypass blocking. If your IP has been blocked due to suspicious activity like spamming, the web scraping proxy will give you access to blocked content.

- Access localized data. Many websites restrict or tailor their content depending on the visitor's location. A proxy located in the target country lets you see what local users see.

- Avoid excessive requests. Each website can limit the maximum number of requests issued by a particular IP address. So, if you exceed the website’s rate limit, your IP will be blocked. Hence, you must frequently change your IP, and scraping proxies provide this opportunity.
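To make the mechanics above more concrete, here is a minimal Python sketch of building a proxy configuration and rotating through a small pool in round-robin order. The addresses, credentials, and helper names (`proxy_url`, `proxies_mapping`, `next_proxies`) are assumptions made up for illustration and do not belong to any provider in this review.

```python
from itertools import cycle
from urllib.parse import quote


def proxy_url(host, port, user=None, password=None, scheme="http"):
    """Build a proxy URL such as http://user:pass@host:port."""
    auth = ""
    if user is not None:
        # Percent-encode credentials so characters like '@' do not break the URL.
        auth = quote(user, safe="")
        if password is not None:
            auth += ":" + quote(password, safe="")
        auth += "@"
    return f"{scheme}://{auth}{host}:{port}"


def proxies_mapping(url):
    """Return a mapping in the shape the `requests` library takes as `proxies=`."""
    return {"http": url, "https": url}


# Hypothetical pool: 203.0.113.0/24 is an address range reserved for documentation.
POOL = [
    proxy_url("203.0.113.10", 8080, "user", "secret"),
    proxy_url("203.0.113.11", 8080, "user", "secret"),
]
rotation = cycle(POOL)  # round-robin: every next() call yields the following proxy


def next_proxies():
    """Return the proxy mapping to use for the next request."""
    return proxies_mapping(next(rotation))
```

With the `requests` library installed, a call could look like `requests.get(url, proxies=next_proxies(), timeout=10)`, so each request leaves through a different IP from the pool.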

How to Choose Proxies for Crawling and Scraping

Many providers offer proxies for web scraping or crawling, yet choosing the most reliable platform with the most effective services is still quite complicated. How do you find parsing proxies that deliver stable performance without malfunctions?

- First, study the range of companies that provide proxies for scraping purposes. Identify the top 15 options for your needs and explore their features. Pay close attention to pricing options as well as the proxy pool available.

- If you find these platforms appropriate, read expert reviews. They will help you study each service in more detail, saving time and money on your investigation.

- Finally, look through user reviews on TrustPilot, SiteJabber, and Reddit. They reflect how things really are, and you will find detailed accounts of any platform failures.

Before you search for top web proxies, get acquainted with our overview of the best 15 proxy services that suit parsing.

Strengths and Weaknesses of Scraping Proxies

| Strengths | Weaknesses |
| --- | --- |
| ✅ Hide your IP, current location, and data | ⛔ Do not encrypt data |
| ✅ Increase your security online | ⛔ Advanced detection methods can still identify your location |
| ✅ Give access to localized content | ⛔ Your traffic passes through a proxy server |
| ✅ Help to avoid excessive requests | |

Top Scraping Proxies in 2025

These are the best proxies for parsing that have earned universal acceptance. Each can boast a large pool of IPs, stable performance, and a good reputation. Let’s focus on each platform in detail.

1. Proxy-Seller — Best for Web Scraping Limitless Potential

Proxy-Seller offers some outstanding features, making it a top pick for beginners who want to explore everything scraping proxies are capable of. Its main benefit is nearly limitless web scraping capability, which it achieves through the absence of restrictions on its ISP proxies. Besides, Proxy-Seller is notable for a stated speed of 1GB/s, which makes data collection swift and convenient.

In addition, the provider lets you connect to IPs in 50 countries. It may not be the highest number you have encountered, but combined with the high speed it is quite enough for seamless web scraping. To ensure the best user experience, Proxy-Seller also offers several helpful tools:

- Proxy Checker. The option allows checking the performance of a proxy server and evaluating the server type and anonymity level.

- Port Scanner. It scans for open ports and helps detect malware, if any, securing your PC and protecting you against data leaks.

- Ping-IP. This tool lets you measure the time needed to transfer data from one device to another.

- IP-Trace. With this tool, you can see every intermediate router on the way to the end node.

All the tools described are free and available for testing on the Proxy-Seller site.

Key Features:

- Convenient choice of 800 subnets and 300 networks

- High-quality protection and anonymity ensured by dedicated Socks5 & HTTPS proxies

- 24/7/365 technical support

- 24-hour trial period and a full refund or package substitution if needed

Best suited for speedy web scraping.

2. Asocks — Best Price-Quality Ratio

Asocks has a massive pool of over 9 million residential and mobile proxy IP addresses. With more than 190 GEOs on hand, users can enjoy a stable connection that allows them to perform any kind of crawling, scraping, and parsing tasks.

The service supports integrations with anti-detect browsers and other tools for seamless web scraping and allows targeting by ASN numbers.

Asocks can boast one of the lowest prices for residential proxies on the market: $3 per 1 GB. In fact, this is a universal price for all the proxy provider’s solutions including static and rotating residential IPs as well as mobile proxies. And you can enjoy a free trial of 3GB before you start paying.

Key features:

- Over 9 million trusted IPs

- Free trial for the first 3GB

- 190+ locations coverage

- 24/7 support

- $3 per 1 GB for all proxy types

Best suited for global data collection.

3. Bright Data – Best for Collecting Data

Ranking third in our list, Bright Data is one of the best proxy providers for data gathering. It positions itself as the #1 category leader in web data gathering, and although we can’t say it is the best service in this niche, it deserves credit: it prioritizes data collection and provides one of the richest proxy pools, with over 72 million addresses. These are stable proxies for web scraping that operate without interruptions. We can’t say Bright Data works flawlessly, but it excels in data gathering. What about the tools provided for data extraction?

Among them are:

- Datasets. These are units of information for every business need. You can search data in different categories: social media, e-commerce, business, and even directories.

- Data Collector. It allows you to scrape data at scale with zero infrastructure, so you do not need to write any code. Besides, it helps overcome obstacles to desired content and gain business partners.

- SERP API. It is another proxy scraper that returns structured data in HTML or JSON from all major search engines.

- Web scraper IDE. It is a unique solution designed for developers to reduce their development time and allow them to scale without limits.

- Scraping browser. This new feature by Bright Data is touted as the world’s only automated browser with any web crawling and scraping tools in one place.

- Web unlocker. This tool allows overcoming any website blocks with ease.

Key features:

- A pool of over 72 million IPs

- Advanced data gathering tool

- ASN, city, country, and carrier targeting

- Worldwide geo-distribution

Best suited for collecting data.

4. MobileProxy— Best Private Proxies for Multiple Accounts

Anyone with at least some experience in web scraping and crawling knows that it is super important that the proxies you use for it are clean and whitelisted. And this is precisely what MobileProxy offers. Their IPs are sourced from real mobile operators in 30 countries worldwide. The chances of a ban for such proxies are close to zero.

Another significant plus is that the user has complete control over their proxies for web scraping. For example, they can independently change…

- IP by API

- IP by timer

- GEO

- Mobile operator.

Needless to say, this autonomy is beneficial for both scraping and crawling. Plus, MobileProxy provides various free tools to enhance the proxies’ performance even further. Those include a free proxy checker, an Internet speed test, an anti-hacking interface, and more.

Every tariff at MobileProxy includes unlimited traffic, a private channel, multithreading, and HTTP and SOCKS5 support. You can use a two-hour free trial to see how it all works. All you need to do is complete a quick registration, and you are good to go.
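The "IP by timer" idea mentioned above can be sketched in a few lines of Python. This is only an illustration, not MobileProxy's implementation: `TimedRotator`, `maybe_rotate`, and the `change_ip` callback are hypothetical names, and the callback stands in for whatever the provider actually exposes, for example a request to a change-IP link.

```python
import time


class TimedRotator:
    """Tracks when the current IP is due to be changed."""

    def __init__(self, interval_seconds, clock=time.monotonic):
        self.interval = interval_seconds
        self.clock = clock  # injectable so the logic can be tested without waiting
        self.last_change = clock()

    def due(self):
        """True once at least `interval` seconds have passed since the last change."""
        return self.clock() - self.last_change >= self.interval

    def mark_changed(self):
        self.last_change = self.clock()


def maybe_rotate(rotator, change_ip):
    """Call change_ip() if the interval has elapsed; report whether it was called."""
    if rotator.due():
        change_ip()
        rotator.mark_changed()
        return True
    return False
```

Calling `maybe_rotate` before each batch of requests keeps the rotation policy in one place, whether the IP is actually changed by a link, an API call, or the provider's own timer.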

Key Features:

- A large pool of almost 2 million IPs from real mobile operators

- Change IPs by link or timer

- Independently change GEO or mobile operator

- Lots of discounts to new users plus promos from partner services

Best suited for multi-accounting.

5. MangoProxy – Best for an Extensive Range of Locations

If you need unrestricted access to web resources worldwide, you should give MangoProxy a try. From what I’ve seen, this provider is probably the leader in IP pool size: 90M addresses across the globe! Obtained from reliable sources, these proxies for web scraping provide anonymity and mirror genuine user behavior. Thus, the risk of getting blocked is greatly reduced.

Unlike some other services I tried, MangoProxy won’t charge you extra for concurrent sessions. So, you can connect as many accounts as you want simultaneously. Perfect for web scraping projects at scale!

As for geo-targeting, the precision is top-notch. I liked that I could target by city, state, country, or ASN. The number of countries covered exceeds 220 so far.

Web scraping and crawling proxies from MangoProxy support connection via HTTP, HTTPS, and SOCKS. The uptime? A whopping 99.99%! The speed of connection never disappointed me. The stated response time is 0.7 seconds – from my experience, that seems to be true.

The cost of MangoProxy’s services will depend on the traffic used, starting from $8 per GB. The more traffic in the plan, the cheaper the solution will ultimately be. Unfortunately, there’s no free trial as such. However, new customers can order the first 1 GB of data to test scraping and crawling proxies for just $1.7.

Key Features:

- 220 countries covered

- 90 million IPs from real users

- Average response time of ~0.7s

- Uptime of 99.99%

- Unrestricted concurrent sessions

Best suited for simultaneous connections.

6. SOAX — Best for Gathering Highly-Localized Data

When you need a proxy for scraping and precise geolocation matters, SOAX proxies enter the scene. The provider offers a huge proxy pool of over 191 million IPs, along with tools that minimize the risk of bans. By buying SOAX residential or mobile proxies for scraping, or their US ISP IPs, you can target particular cities or ISPs. This way, you can easily conduct local market analysis, monitor competitors, do local SEO optimization, track local events, and much more.

Besides, SOAX has additional scraping solutions built on its large, ethically sourced proxy pool: you can purchase AI, SERP, eCommerce, and social media APIs.

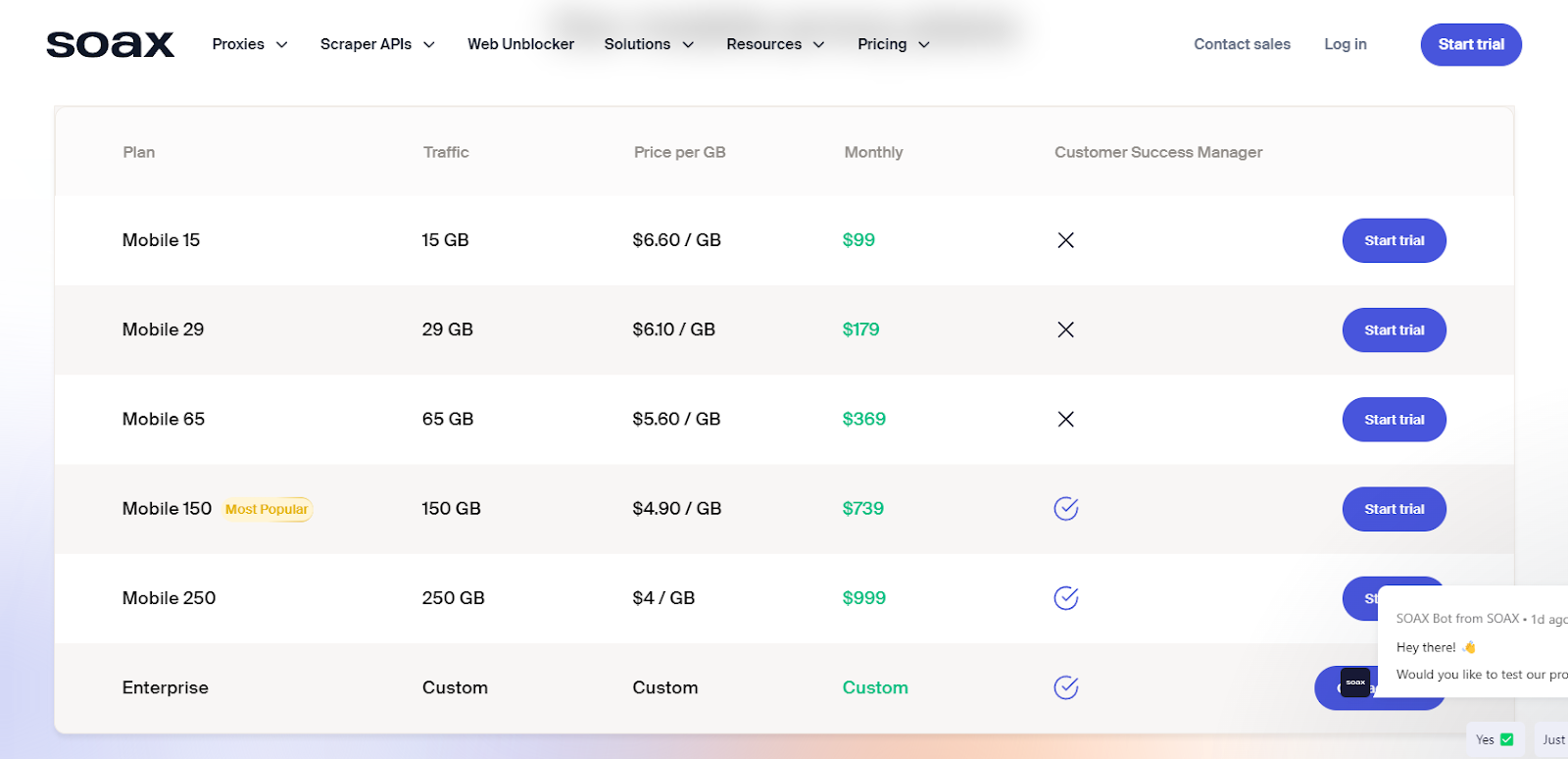

Pricing plans are the same for US ISP, residential, and mobile proxies and start from $4/GB, while datacenter proxies cost $0.50/GB.

Key features:

- Special tools for customizing proxies

- Ready scrapers

- A pool of 191 mln IPs

- Dedicated account managers

- A success rate of 99.55%

Best suited for marketers and large-scale businesses.

7. Live Proxies — Best for Scraping Data from North American and UK Resources

Need to scrape data from restricted resources in North America or the United Kingdom? Live Proxies is a provider exactly for that! Their IP pool consists of 10M+ clean addresses in the US, Canada, and the UK. Those are real user devices’ IPs assigned by Internet providers and mobile operators in these countries. The provider also offers proxies in some random locations other than the ones I mentioned.

These scraping proxies are used by many — marketers, SEO specialists, scientists, financial analysts… The list can go on. I used rotating residential IPs for real estate data gathering. My impressions? I saw high-speed connectivity and consistent uptime. And no obstacles like CAPTCHAs or IP bans down the road.

Once you pay for scraping and crawling proxies, you have them up and running instantly. The configuration process won’t make you sweat either. The whole setup is intuitive, and you can easily tweak your proxy settings from a dashboard.

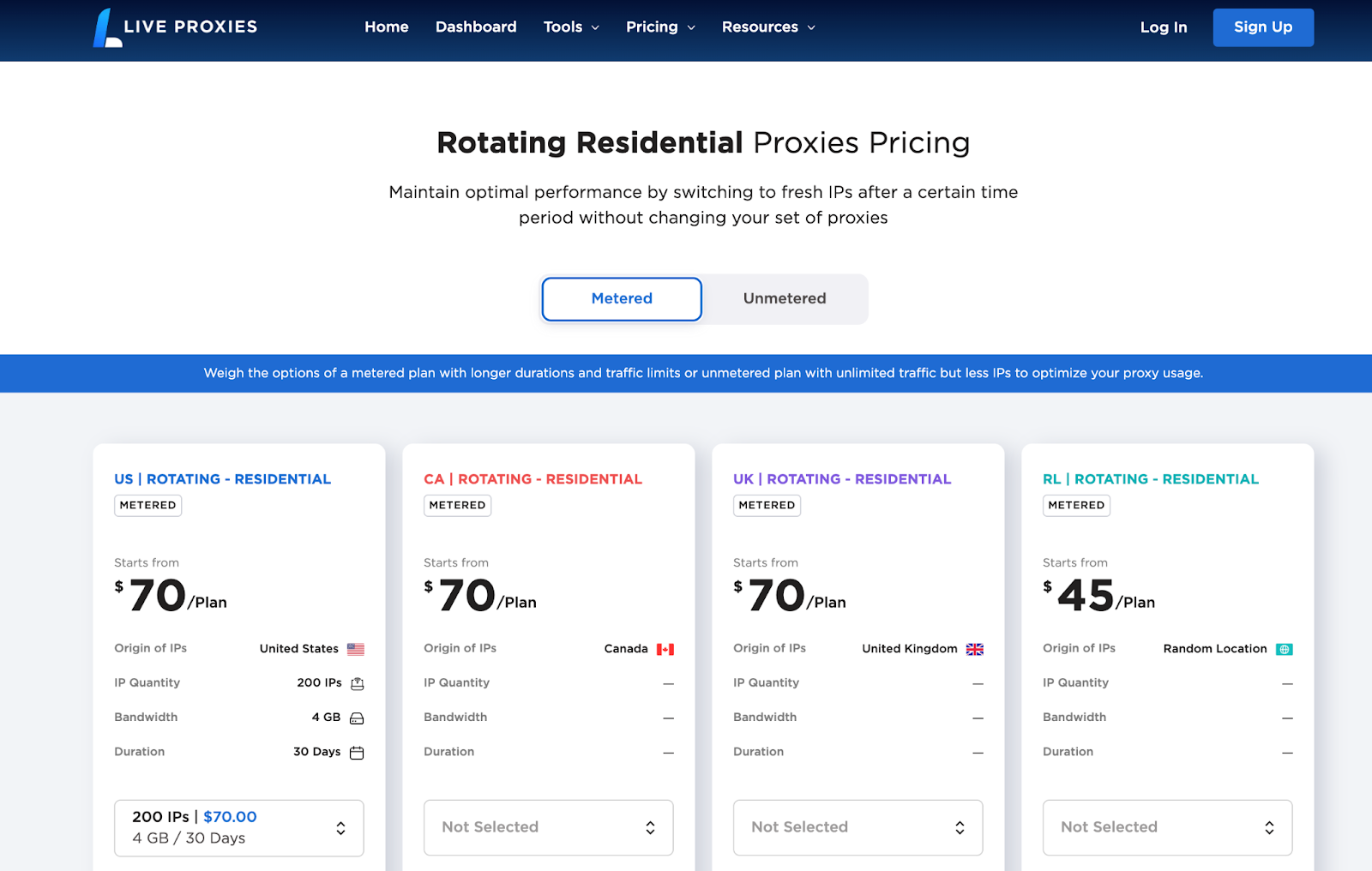

Pricing plans come in two formats: metered and unmetered. Metered packages range from a $70, 4 GB, 30-day plan to a $530, 50 GB, 90-day plan. Unmetered packages differ in the number of IPs included, from 25 to 100 addresses, with prices ranging from $130/month to $330/month.
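To compare metered plans like these on equal terms, it helps to convert them to a per-gigabyte price. The short Python sketch below does that arithmetic for the two metered plans quoted above; the function name `price_per_gb` and the plan labels are just illustrative.

```python
def price_per_gb(price_usd, gigabytes):
    """Effective cost of one gigabyte in a metered plan."""
    return price_usd / gigabytes


# Figures taken from the metered plans quoted in this review.
plans = {
    "4 GB / 30 days": (70, 4),
    "50 GB / 90 days": (530, 50),
}

for name, (price, gb) in plans.items():
    print(f"{name}: ${price_per_gb(price, gb):.2f} per GB")
```

The smaller plan works out to $17.50 per GB and the larger one to $10.60 per GB, so the bigger package is noticeably cheaper per unit of traffic.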

Key features:

- Highly trusted IPs resistant to restrictions and blocks

- Speedy proxy connection with 0.5s response time and 99.97% uptime

- Both static and rotating scraping proxies, with sticky sessions of up to 60 minutes available for rotating IPs

- Support for HTTP/HTTPS protocols (residential proxies) and 3G/4G standards (mobile proxies)

- No concurrency restrictions

- 100% private proxies (not shared with other clients)

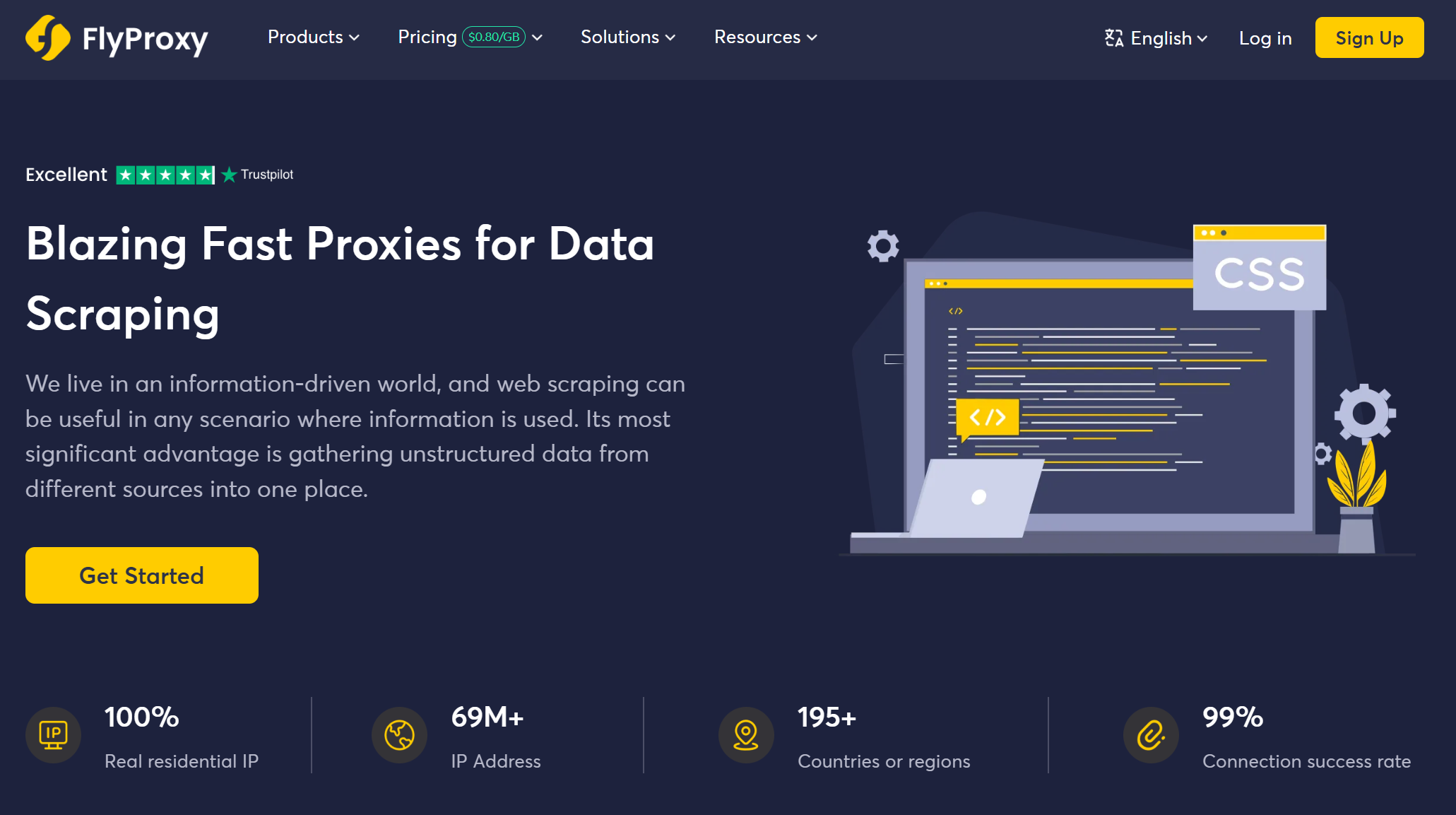

8. FlyProxy – Most Efficient Option of 2025

Crawling and scraping can be tough, but fine folks back at FlyProxy think it really shouldn’t be. They’ve got a huge selection of over 69M IP addresses from 195+ countries. This impressive network makes it super easy to access local content, which can be the biggest difference maker for gathering and analyzing data effectively. Diving into local e-commerce sites? Doing some market research? FlyProxy has you covered, but, best of all, that’s not all they’re great at!

One of the standout features of FlyProxy is its adaptive IP rotation technology, which makes it easy for users to switch IP addresses without any hassle. This is important for scraping tasks because it keeps any single IP from sending too many requests (which may, in my experience, get you perma-blocked on the websites you’re targeting). FlyProxy also keeps your original IP under wraps, so you can extract data without major interruptions.
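As a rough illustration of why rotation matters here, the Python sketch below retries a request through the next proxy whenever the response looks like a block. It is a generic pattern, not FlyProxy's adaptive rotation: the status codes treated as blocks and the caller-supplied `fetch(url, proxy)` function are assumptions made for the example.

```python
# Status codes commonly returned when a site refuses or throttles a client.
BLOCK_STATUSES = {403, 429}


def fetch_with_rotation(url, proxies, fetch, max_attempts=3):
    """Try `url` through successive proxies until one is not blocked.

    `fetch(url, proxy)` is supplied by the caller and must return
    a (status_code, body) tuple.
    """
    last_status = None
    for proxy in proxies[:max_attempts]:
        status, body = fetch(url, proxy)
        if status not in BLOCK_STATUSES:
            return proxy, status, body
        last_status = status  # blocked: move on to the next proxy
    raise RuntimeError(f"all attempts blocked (last status {last_status})")
```

Passing the HTTP call in as `fetch` keeps the retry logic independent of any particular HTTP library or provider.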

The service works with HTTP, HTTPS, and SOCKS5 protocols, so you can scrape data safely and securely. Plus, it provides unlimited bandwidth, so users can handle large scraping projects without stressing over those pesky data limits. This is especially helpful for bigger businesses that go big instead of going home!

FlyProxy offers residential proxies at just $2.50 per GB, making it an affordable option for everyone from small scrapers to larger businesses. Also, you can count on 24/7 customer support, so help is always there if you run into any issues.

To sum it up, FlyProxy offers a huge IP pool, customizable IP rotation, and great pricing, making it a very solid pick for anyone wanting to dive into crawling and scraping, but do it in a smart way. It effortlessly tackles the usual problems that come up during scraping, making it a must-have for anyone working with data.

Key features:

- Very easy-to-use dashboard;

- Unlimited concurrent sessions;

- Easy integration;

- Sticky sessions with an IP lifetime of up to 24 hours;

- Big discounts for bigger purchases.

Best for businesses seeking reliable and efficient web data extraction.

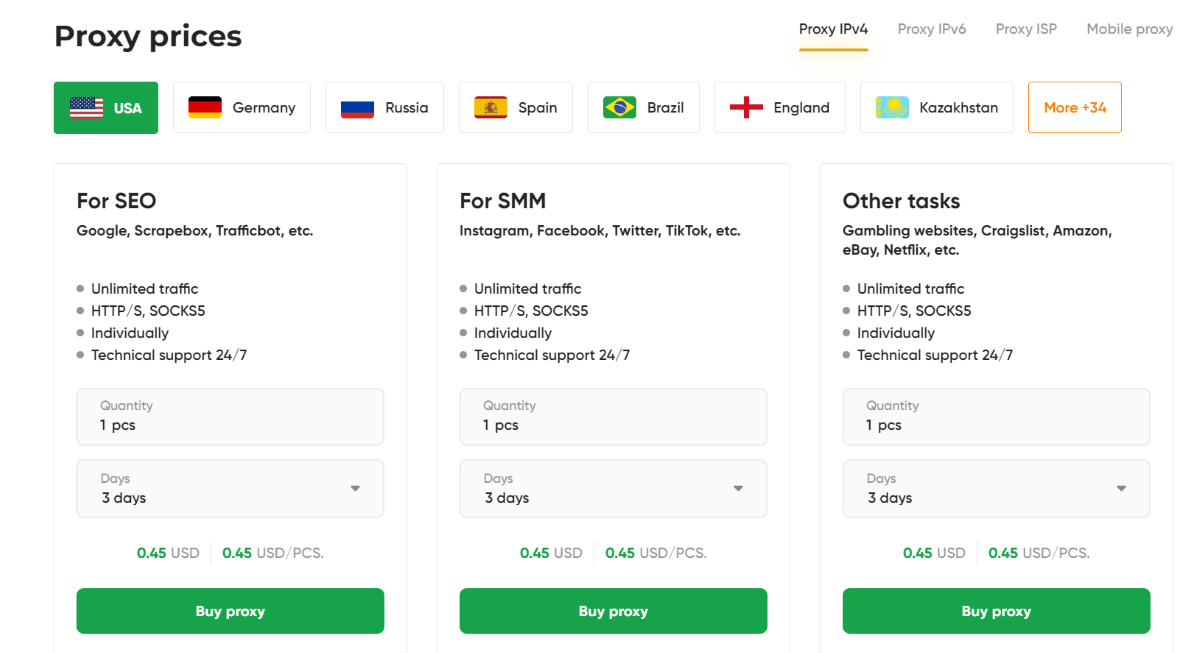

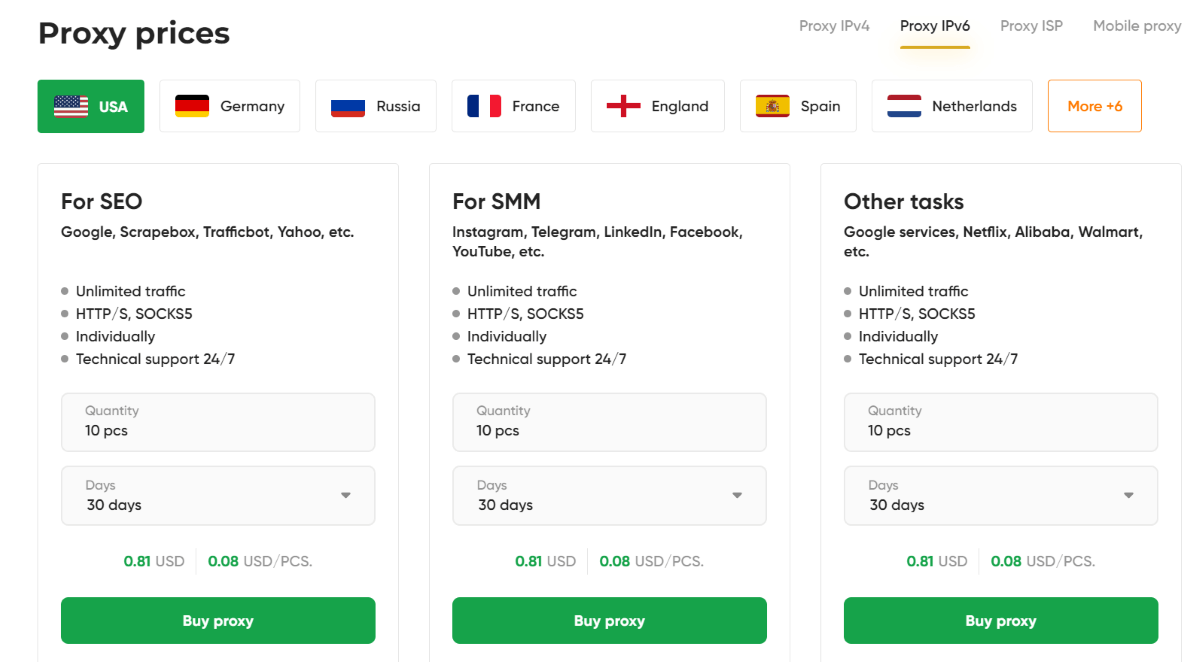

9. Proxy-IPv4.com — Best for Traffic Arbitrage

Affiliate marketers — people who run traffic to various offers and get a commission — constantly require anonymous browsing. Proxy solutions can solve many traffic arbitrage tasks: from crawling competitors’ ad campaigns to scraping social network subscribers.

Sometimes, it’s enough to use a simple IPv4 or IPv6 proxy. It saves money yet allows you to reach high speeds with no limits. It is precisely what Proxy-IPv4.com offers to customers requiring proxies for crawling and scraping: cheap private IPs in 20 countries with minimum ping and unlimited traffic.

To purchase a proxy, select an IP address country and choose the number of packages. If you opt for a mobile proxy, you will have automated IP rotation and won’t need to buy several addresses to rotate them manually.

Prices may vary depending on the GEO and proxy type. Here are some pricing examples:

Key features:

- Unlimited traffic

- No blocklisted proxies

- Geo-targeting

- 24/7 Live Chat

- Helpful customer support reps

- Individual pricing upon request

Best suited for SMM and SEO specialists.

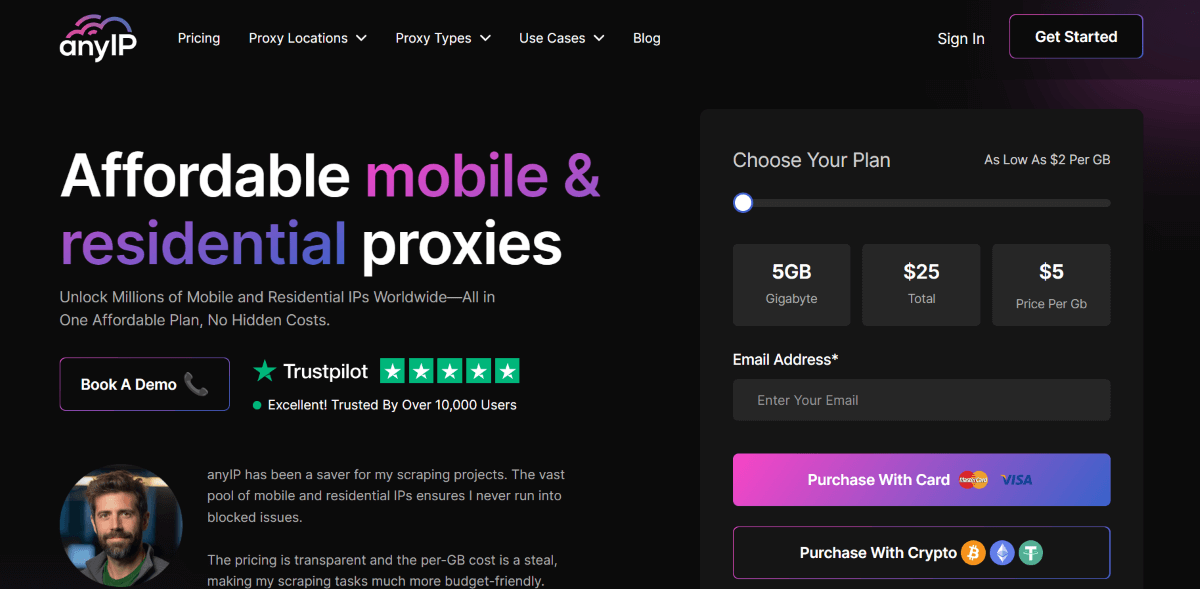

10. AnyIP.io – Best for Overcoming Restrictions

AnyIP.io stands out as a user-friendly proxy provider for scraping and crawling, granting access to a vast array of mobile and residential IPs worldwide. The range of products and services available varies from sneaker bots and SEO scrapers to Instagram automation tools, some of which cost as low as $2/GB. AnyIP proxy service proves invaluable for SEO scraping, data monitoring, sneakers, Instagram, Amazon, Twitter, Reddit automation/scraping, and general security purposes.

If you’re on the lookout for the best proxies for scraping in 2025, AnyIP is a top-tier choice for several compelling reasons:

- It maintains affordability while boasting a global network with over 30 million IPs;

- Supports both HTTP and SOCKS5 protocols;

- Ensures compatibility with all software;

- Achieves an impressive 98.6% request success rate.

And as a cherry on top, it even adheres to a robust “Satisfied or refunded” policy. For more detail, I highly recommend checking out my comprehensive review of all the opportunities AnyIP.io can deliver!

This crawling proxy service offers a remarkably diverse range of products, providing proxies for Telegram, Instagram, Amazon, mobile solutions, and sneaker bots (potentially the best in the industry). These offerings enhance and secure users’ browsing experiences. The service delivers fast speeds, with an average response time of 0.6 seconds, and permits unlimited concurrent requests and automatic IP rotation without constraints.

A notable feature is the continuous growth of its IP pool. In the competitive landscape of AnyIP.io vs. its counterparts, it distinguishes itself as more than a reseller by maintaining a unique network of IP addresses.

Key features:

- A vast IP pool comprising over 30 million IPs

- Unrestricted IP rotation capabilities

- High-stealth mobile proxies with 4G/5G technology

Best suited for bypassing robust restrictions.

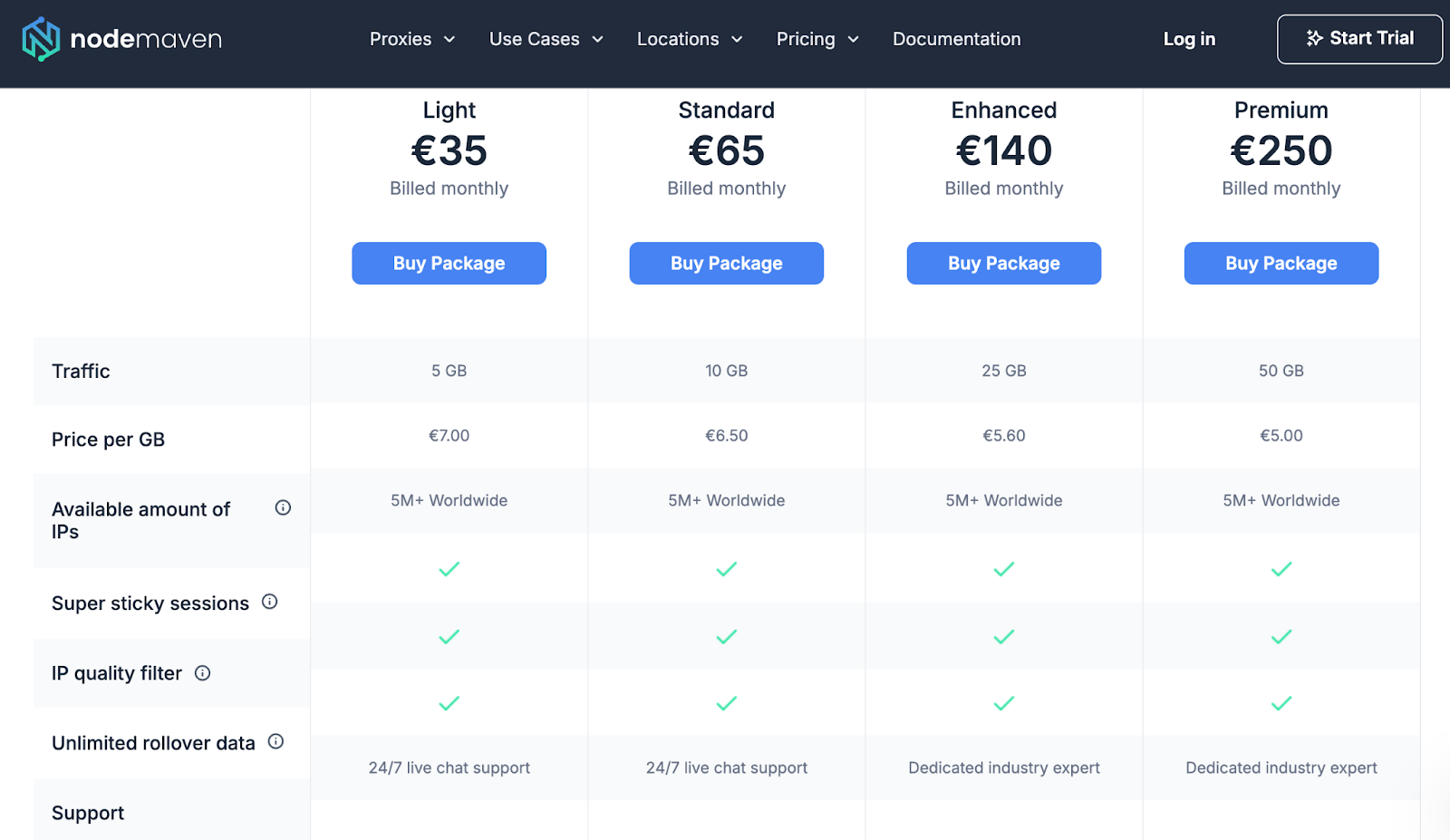

11. NodeMaven – The Cleanest & Top-Tier Residential Proxies

I once tried NodeMaven’s web scraping proxy servers, and I did so for two main reasons. First, my previous IP addresses had shown poor quality when checked by third-party tools. Second, even with my past proxies perfectly configured, I still had unexplained problems.

Well, NodeMaven has solved them all. For one thing, they seem to be the first in the crawling proxy market to put the quality of IPs far above their quantity. That, in particular, resulted in over 95% of their IP addresses having a clean reputation.

Also, in terms of proxy servers for scraping, NodeMaven differs significantly due to the following unique features you’d hardly find anywhere else:

- First-class IP filtering, for which they use an advanced algorithm that checks IP addresses in real time before assigning them.

- Sticky sessions here are powered by NodeMaven’s special hybrid proxy technology, which provides IP sessions lasting up to 24 hours, and that, my friend, is well above the market average.

- Expert-level customer support, which is worth having even if you haven’t yet hit a snag with scraping proxies. NodeMaven offers detailed problem analysis and expert assistance in optimizing your performance.

Key Features:

- Unlimited concurrent sessions

- More than 5 million premium residential IP addresses for web scraping and crawling

- Precise geographical targeting across over 1400 cities in 150 countries

- Trial check – for only €3.99 – which includes 500 MB of traffic to test all features

- When you connect to NodeMaven’s scraping proxy server, you are only allocated an IP address after it has gone through an advanced quality control algorithm.

Best suited for SEO, multi-accounting, and online shopping.

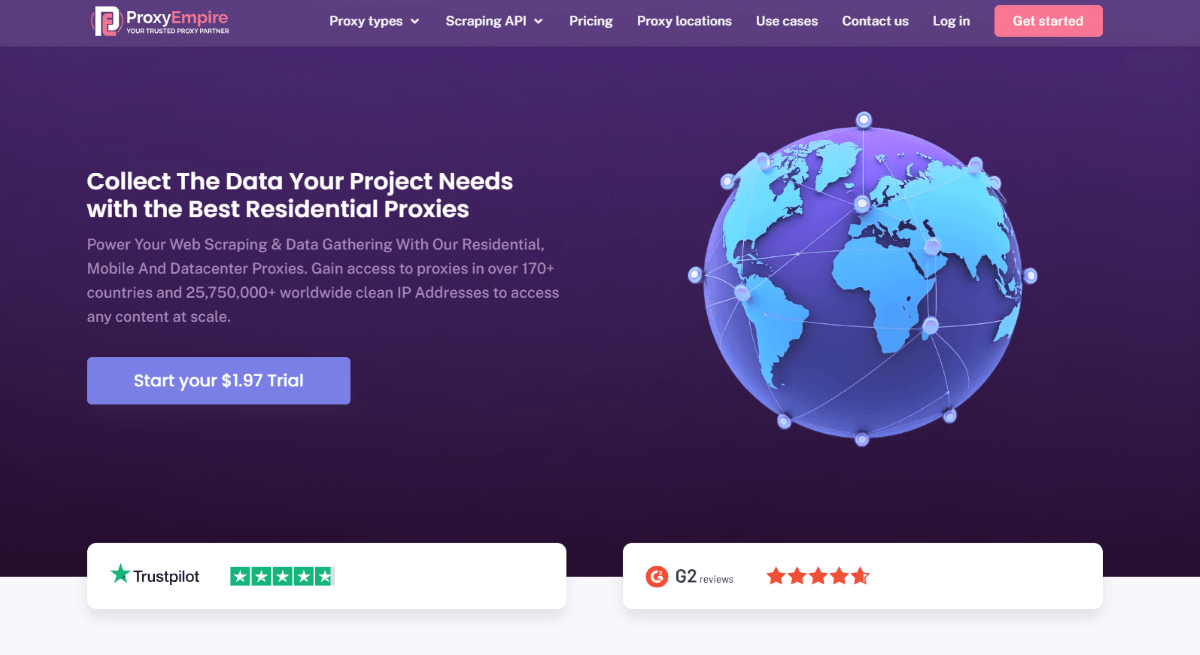

12. ProxyEmpire — Best for Precise Geo-Targeting

ProxyEmpire has become one of the most prominent scraping proxy providers. You can find all major proxy types here: residential, datacenter, and mobile servers. Depending on your use case, you can also select static mode or rotation.

With an extensive pool of 25+ million IPs worldwide, ProxyEmpire is a strong fit for professional scraping and crawling. The service stands out for advanced session control, a high connection rate, and excellent uptime of 99.95%.

You can target the majority of proxies presented here by city, state, country, and even ISP, which is rather convenient for crawling purposes. These intermediaries have unlimited bandwidth and concurrent sessions, so your activity won’t be interrupted by undesired blocks.

ProxyEmpire plans to introduce several scraping APIs integrated into the pricing plan you purchase to provide convenient data extraction. Among the tools are:

- SERP Scraping API: This tool facilitates access to search engine data. It enables high-quality market analysis with precise geo-targeting. It allows users to extract data from major search engines like Google, Bing, Baidu, and Yandex. If you need live and reliable data, this tool will help boost localized search.

- eCommerce Scraping API: If you want your business to excel in the digital marketplace, an eCommerce scraping tool is what you need. It allows crawling data from 60+ well-known marketplaces to develop new business strategies with real-time insights and updates.

- Social Media Scraping API: It gives access to data from top social media platforms like Instagram and TikTok. This scraper can easily extract data from profiles, posts, photos, reels, sounds, and videos. It will allow you to make informed decisions and build a working strategy for account promotion.

Key Features:

- Pool of 25+ million IP addresses

- 170 locations worldwide

- Residential, datacenter, and mobile servers

- Quality scraping tools integrated into pricing plans

- Static mode and IP rotation

- Excellent integration with other tools

Best suited for crawling.

13. Smartproxy – Best for Scraping Purposes

Smartproxy is a top-ranked proxy provider that has already established itself as one of the best solutions for scaling a business with a fine set of proxy scrapers. Here you can use residential, datacenter, and dedicated DC proxies that count over 50 million addresses with worldwide coverage in over 195 locations.

Although the choice of server types is not so rich, the service compensates with outstanding quality and speed. To improve the user experience despite the limited server types, Smartproxy offers several tools:

- X Browser. This tool juggles multiple accounts while guaranteeing no risk of getting blocked.

- Chrome Extension. It will allow you to bring all essential features of proxies for web scraping into your browser.

- Firefox Add-on. It moves proxies to your favorite browser with a few clicks.

- Address generator. With it, you can generate proxy lists in bulk effortlessly.

Note that all these free tools make Smartproxy stand out among other providers.

What about proxy scrapers offered by this service? It boasts its ready-made solutions to fit any scraping needs:

- SERP Scraping API. This proxy scraper boasts a success rate close to 100%. It is a full-stack solution for Google and other search engines. SERP Scraping API combines a proxy network, web scraper, and data parser, making it a universal product for business scaling.

- E-Commerce Scraping API. This tool lets you get neatly structured e-commerce data in JSON or HTML. Like the SERP scraper, it combines a proxy network, web scraper, and data parser.

- Web-Scraping API. Parse at scale with this web scraper. All you need is to send a single request and get data in raw HTML from any website you like. It can help you research data from sites of any complexity, including those programmed with JavaScript.

- Social Media Scraping API. This solution allows scraping data from any social media platform, including Twitter, TikTok, or Instagram. It will enable getting well-structured data on images, profiles, soundtracks, etc., while avoiding IP bans or blockages.

- No-Code Scraper. It allows you to schedule tasks and store scraped data without writing code. Thus, you can parse visually, choose scraping templates, and forget about coding skills.

Key features:

- A pool of over 40 million IPs

- Proxy address generator

- Smart scraper

- Chrome proxy extension

- 3-day money-back guarantee

Best suited for scaling businesses.

14. Oxylabs – Best Overall

Oxylabs continues the list of the best proxies for data extraction. This platform has an extensive pool of over 100 million high-quality residential proxies with unblemished performance. As for the server types presented here, there are various residential (Mobile, Rotating ISP) and datacenter (Shared, Dedicated, SOCKS5, Static) proxies. Oxylabs can be proud of its high speed and absence of failures and interruptions.

Among the special features implemented in Oxylabs is an integrated proxy rotator. What does it mean? It means that the servers you use will rotate automatically or, in other words, replace one another without your intervention. Thus, you can achieve a 100% success rate while staying anonymous online.

JavaScript rendering is another feature that makes parsing with Oxylabs uncomplicated. It allows extracting data from even the most advanced and complex targets, such as JavaScript-heavy websites.

Finally, Oxylabs offers convenient data delivery. It is an enterprise-grade solution that is ready to use straight away. Thus, you can start crawling in minutes and get parsed data delivered to your preferred storage solution.

Oxylabs offers a set of proxy scrapers to ease scraping management:

- SERP Scraper API. It performs a localized search from major search engines, delivers live and reliable data, and is resilient to SERP layout changes. This tool is used mainly for parsing, brand monitoring, and ad tracking.

- E-Commerce Scraper API. With this tool, you can access and crawl e-commerce product pages. It allows you to scrape thousands of e-commerce websites, provides adaptive parsing, and delivers structured data in JSON. It is best suited to pricing intelligence, product catalog mapping, and competitor analysis.

- Real Estate Scraper API. It is an advanced solution for scraping real estate platforms and avoiding blockages. It allows collecting property type, location, pricing, and amenities from all the major sites and extracting them in HTML format.

- Web Scraper API. Here you can receive scalable real-time data from a majority of websites. This tool covers customizable request parameters and JavaScript rendering and provides convenient delivery. Users rely on it primarily for website change monitoring, fraud protection, and travel fare monitoring.

Each tool is a paid one. However, pricing options are adequate, with a minimum package starting from $49 monthly. Still, you can request a free trial for these services.

Key features:

- Extensive pool of 100 million IPs

- Integrated IP rotation

- JavaScript rendering

- Auto-scaling

- Enterprise-grade solutions

- Ready-to-use data

- Reliable proxy resources

Best suited for web scraping.

15. IPRoyal – Best for Social Networking

IPRoyal ranks fifteenth in the list of top web proxies for crawling. Its proxy pool is about to reach 8 million addresses of different server types: residential, datacenter, static, sneaker, private, and 4G mobile. Most often, IPRoyal comes in handy for social networking and covers five major social platforms where it can be applied. These are Facebook, Instagram, YouTube, Reddit, and Discord.

This service allows you to manually switch between sticky and rotating sessions. In rotating mode, the platform changes the IP address with every request sent. A sticky session, by contrast, maintains the same IP address for up to 24 hours. For scraping, a rotating session is the better choice because it keeps you safe from blocks and restrictions. Among the tools provided here are:

- Google Chrome Proxy Manager;

- Firefox Proxy Manager;

- Proxy Tester.
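
To make the difference between the two session modes concrete, here is a minimal client-side sketch. It is not IPRoyal's implementation (providers usually handle rotation on their own gateway); the `ProxySession` class, its parameters, and the proxy addresses are hypothetical and exist only for illustration.

```python
import itertools
import time


class ProxySession:
    """Pick a proxy per request in either rotating or sticky mode (illustrative only)."""

    def __init__(self, proxies, sticky_seconds=0, clock=time.monotonic):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(proxies)
        self._sticky_seconds = sticky_seconds  # 0 means: new proxy on every request
        self._clock = clock                    # injectable so the logic is testable
        self._current = None
        self._expires_at = 0.0

    def next_proxy(self):
        """Return the proxy to use for the next request."""
        now = self._clock()
        rotate_every_request = self._sticky_seconds == 0
        if self._current is None or rotate_every_request or now >= self._expires_at:
            self._current = next(self._cycle)
            self._expires_at = now + self._sticky_seconds
        return self._current


# Hypothetical addresses from the reserved TEST-NET-1 documentation range.
POOL = ["192.0.2.10:8000", "192.0.2.11:8000", "192.0.2.12:8000"]

rotating = ProxySession(POOL)                              # changes IP on every request
sticky = ProxySession(POOL, sticky_seconds=24 * 60 * 60)   # keeps one IP for up to 24 hours
```

Calling `rotating.next_proxy()` repeatedly walks through the pool, while `sticky.next_proxy()` keeps returning the same address until the sticky window expires.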

Key features:

- Bandwidth savings and fastest speeds

- Internet usage control

- Improved security

Best suited for social networking.

16. NetNut – Best for Manual Session Control

NetNut offers a pool of over 52 million residential IP addresses available worldwide. This scraping proxy does not rely on a P2P network – its IPs are always online and ready for further usage. One of the most significant advantages of NetNut is manual session control. It means you can use the same IP for up to 30 minutes and change it independently – the session control is entirely in your hands.

For ease of summarizing current statistics, the platform offers Traffic Data Analysis and Reporting. If you want to test this service, a 7-day free trial will help you independently estimate the quality of NetNut service.

Key features:

- Over 52 million residential IPs

- Unlimited concurrent sessions

- Manual session control

- 7-day free trial

Best suited for manual session control.

Disclaimer:

The following four proxy services lack the features required for advanced web scraping and data crawling. That’s why we mention them as alternatives for the 16 mentioned above. These four are good choices if you are searching for a fallback platform.

1. ProxySale — Best for Speediest Scraping

ProxySale offers access to an extensive proxy pool in almost any country. Its dedicated proxies help you increase speed, avoid CAPTCHAs, run multi-threaded jobs, and bypass blocking. Moreover, unlike other options, this one will not create problems when scraping Google. Once you choose ProxySale for your needs, you can be sure that its team will help you set it up and continue supporting you during the usage period.

Key features:

- Speed of 1 Gbps

- 24/7 Customer Support

- 100% money-back guarantee

- Compatibility with any platform or site

Best suited for increased scraping speed.

2. Shifter – Best for Bypassing Restrictions

Shifter offers over 50 million proxies for scraping with worldwide coverage and ultra-low latencies. For data extraction, you can use its SERP API and Web Scraping API. Although Shifter has an extensive pool of proxy servers and is a good solution for parsing, it is not well suited to working with Google and Amazon.

When scraping Google pages, you’ll have to get through an avalanche of captchas that slow down the work. Anyway, there are a lot of ways to avoid them. As for Amazon, the company forbids using proxies unless you contact its support. Finally, the session control is limited here.

Key features:

- Over 50 million addresses

- Acceptable scraping

- Limited session control

Best suited for bypassing restrictions.

3. GeoSurf – Best for Scheduled Data Collection

GeoSurf is another web scraping proxy that stands out for precise location targeting and the ability to select the frequency and timeline of parsing. It helps greatly when you need to extract a particular amount of data within a specific time. Besides, these proxies are stable and have a sticky session of up to 10 minutes.

Key features:

- High stability

- Browser extension

- Scheduled scraping

Best suited for scheduled data collection.

4. Rayobyte – Best for SEO Monitoring

Rayobyte (formerly Blazing SEO) is the last proxy crawler in our rating. It focuses mainly on residential proxies, but you can still get practically all types of intermediaries here. Rotating, static, residential, datacenter, mobile, and ISP proxies – everything is at your service.

Rayobyte is well suited to all SEO purposes, from SEO monitoring to collecting SERP data on localized sources. Unfortunately, Rayobyte has the same problems with Amazon and Google as Shifter. Besides, the IP distribution is quite unpredictable, limiting your data-gathering opportunities.

Key features:

- End-to-end hardware control

- High availability

- 99% uptime

- Scraping robot

Best suited for SEO monitoring.

FAQ

Sure, you will find some providers offering their services for free, but they lack quality. These are unreliable services with plenty of malfunctions. Besides, your data will no longer be secure once you entrust your device to free services. Such a proxy for scraping might do more harm than good.

Websites set different limits on the number of allowed requests. The average is 600 requests per hour from a single IP address, which works out to ten requests per minute. Say you need to scrape 600,000 pages per hour. Dividing 600,000 by 600 gives 1000, so you need 1000 proxies for scraping. Nevertheless, it is essential to pay attention to scraping circumstances.
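
The estimate above is a simple ceiling division, and a small helper makes it easy to rerun with your own numbers. This is only a sketch: the function name `proxies_needed` is made up for illustration, and the 600 requests per hour default is the average quoted above, not a limit that any particular website guarantees.

```python
def proxies_needed(pages_per_hour, requests_per_proxy_per_hour=600):
    """Estimate how many proxies keep each IP under the per-hour request limit."""
    if pages_per_hour < 0:
        raise ValueError("pages_per_hour must not be negative")
    if requests_per_proxy_per_hour <= 0:
        raise ValueError("requests_per_proxy_per_hour must be positive")
    # Integer ceiling division: a partially used proxy still counts as a whole one.
    return (pages_per_hour + requests_per_proxy_per_hour - 1) // requests_per_proxy_per_hour


print(proxies_needed(600_000))  # 1000, matching the example above
```

If the target site tolerates fewer requests per IP, pass a lower second argument and the estimate grows accordingly.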

Let’s assume you need 1000 proxies to scrape 600,000 pages per hour. To avoid undesired blocking, you need to use them most efficiently. The proxy pool allows you to manage these 1000 proxies and is regulated by the proxy network.

Using this pool, you shift all the problems of choosing IPs and rotating them onto the shoulders of the proxy network. Providers will give you a single entry point to all or only part of the proxies of the pool. As for pricing, it depends on bandwidth and ports.

We can’t say for sure which proxy scraper will be the best for parsing because the success rate of each service depends on website parameters. Nevertheless, choosing services that guarantee your privacy and are challenging to detect or block is better.

Don’t forget about the speed rate and level of security because they also play an essential role in choosing the best proxy scraper. We can confidently say that each platform we have reviewed is reliable and fit for parsing.

These proxies operate on the same principle as ordinary ones. Here is a simple guide on how to set up a proxy scraper:

1. Find Wi-Fi parameters in the network settings.

2. Click on the Change Network button.

3. Enter Advanced Settings and select Proxy Server.

4. In the Proxy Server section, change configurations manually.

5. Save settings.
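
The steps above configure a proxy at the operating system level. If you scrape from a script instead, the same settings can be applied in code. Below is a minimal sketch using only Python's standard library; the gateway host `proxy.example.com`, the port, and the credentials are placeholders, so replace them with the values your provider gives you.

```python
import urllib.request
from urllib.parse import quote


def build_proxy_url(host, port, username=None, password=None, scheme="http"):
    """Return a proxy URL such as http://user:pass@host:port."""
    if username is None:
        return f"{scheme}://{host}:{port}"
    # Percent-encode credentials so characters like "@" or ":" cannot break the URL.
    credentials = quote(username, safe="")
    if password is not None:
        credentials += ":" + quote(password, safe="")
    return f"{scheme}://{credentials}@{host}:{port}"


def make_opener(proxy_url):
    """Build an opener that routes HTTP and HTTPS requests through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Placeholder gateway and credentials (assumptions, not a real endpoint).
PROXY_URL = build_proxy_url("proxy.example.com", 8080, "user", "p@ss")
opener = make_opener(PROXY_URL)

# With real credentials, a request would then go through the proxy:
# html = opener.open("https://example.com", timeout=10).read()
```

Building the opener does not touch the network, so you can construct it once and reuse it for every request in the scraping job.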

Final Words

Proxies for web scraping and crawling are used universally since they are the best tool for data gathering. A great variety of platforms specialize in proxy scrapers, but it is difficult to pick out the best one. These 16 reliable platforms have established themselves as high-quality services for advanced web scraping and crawling.

Other categories

Do you want to find out the up-to-date information, news, and expert feedback about modern proxy solutions? That all is at your fingertips: