Load Balancing

Load balancing is a core technique in networking and computing that keeps services running smoothly and makes efficient use of resources across multiple servers. In this guide, I’ll cover its mechanisms, the available algorithms, and practical tips for configuring it effectively.

How Does Load Balancing Work?

A load balancer sits between clients and a group of servers or endpoints and distributes incoming network or application traffic among them. This keeps any single system from becoming overloaded, which improves efficiency and response times.

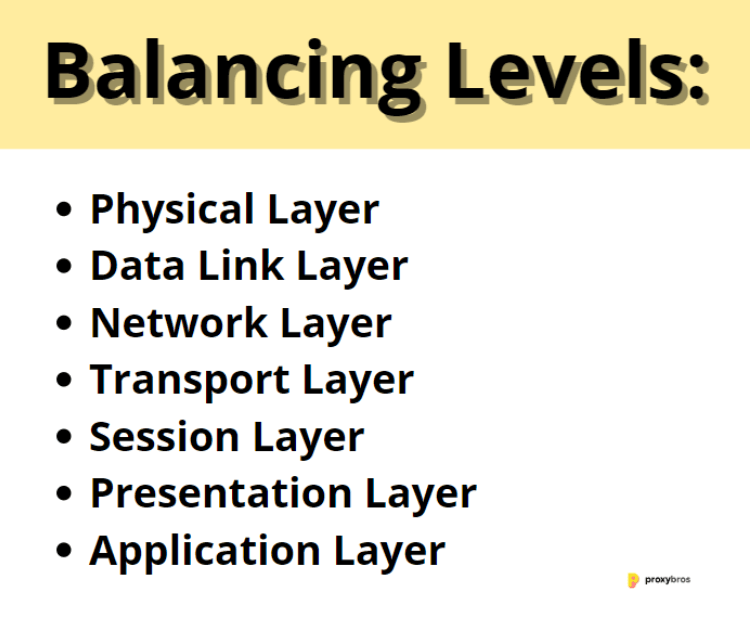

Balancing Levels — OSI Model

Physical Layer (Layer 1)

At the foundational physical layer, data is transmitted through the network infrastructure itself. This transmission depends on reliable hardware (servers, cables, switches, and routers) to remain stable.

Data Link Layer (Layer 2)

Load balancing at this layer makes decisions based on MAC addresses, which are unique identifiers for network interfaces. This is especially relevant in local area networks (LANs), where it helps distribute traffic evenly across multiple machines.

Network Layer (Layer 3)

At the network layer, common approaches include DNS load balancing, IP Anycast, and network load balancing. These approaches rely on IP addressing and routing to distribute user requests, improving the scalability and reliability of network connections.

- DNS Load Balancing

DNS load balancing distributes requests across multiple servers by varying the DNS responses returned for a hostname. It balances traffic by directing users to different servers based on their geographic location or the current load on the servers.

- IP Anycast

With IP Anycast, a single IP address is announced by multiple servers in different locations. Routing protocols direct each request to the nearest host in routing terms, which reduces latency and spreads traffic across locations.

Transport Layer (Layer 4)

Load balancing at the transport layer operates on TCP and UDP traffic, making decisions from IP addresses and port numbers rather than from the content of the request. Balancers at this layer can also keep a client’s connections on the same server (session persistence), which is crucial for applications like online shopping, where session integrity is key. The two protocols differ as follows.

- TCP

TCP provides reliable, ordered, and error-checked delivery of a stream of data across a network. These guarantees are essential for applications that require precise data delivery.

- UDP

UDP, in contrast, is connectionless and is used for applications that need fast, low-overhead delivery, such as video broadcasting. Here, speed matters more than guaranteed delivery or message ordering.

Session Layer (Layer 5)

At the session layer, balancers manage session state, ensuring sessions are maintained and can be recovered after interruptions. This is crucial for applications that require persistent user interaction.

Presentation Layer (Layer 6)

This layer translates data between the application layer and the network. Balancers that operate here ensure data is appropriately encrypted and formatted across different computing platforms, for example by handling SSL offloading on behalf of the servers.

Application Layer (Layer 7)

Balancers at the application layer inspect the request itself and directly affect the end-user experience. Routing decisions can be based on content type, cookies, user login status, and other HTTP header information.
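To make the idea of content-based routing concrete, here is a minimal sketch of a Layer 7 decision function. The pool names, the `session_id` cookie, and the rule order are all hypothetical and chosen only for illustration; a real balancer would express rules like these in its own configuration language.

```python
def choose_pool(path, headers):
    """Pick a backend pool from the request path and HTTP headers.

    Pool names and rules are illustrative assumptions, not a standard.
    """
    cookies = headers.get("Cookie", "")
    if path.startswith("/static/"):
        return "static-pool"        # content type: static assets
    if "session_id=" in cookies:
        return "logged-in-pool"     # user login status, inferred from a cookie
    if headers.get("Accept", "").startswith("application/json"):
        return "api-pool"           # other HTTP header information
    return "default-pool"


print(choose_pool("/static/logo.png", {}))
print(choose_pool("/cart", {"Cookie": "session_id=abc123"}))
print(choose_pool("/v1/items", {"Accept": "application/json"}))
```

The rules are evaluated top to bottom, so their order is itself a design choice: here a static asset is served from the static pool even for a logged-in user.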

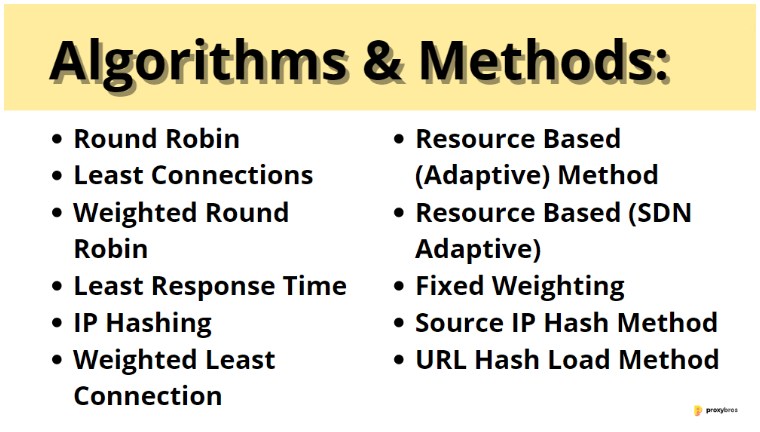

Load Balancing Algorithms & Methods

Round Robin

The Round Robin method is a straightforward technique that cycles through a list of servers in a pool, assigning each new request to the next server in line. It produces a uniform traffic distribution and is particularly effective where all servers have similar specifications and capacity. This technique is simple to implement and maintain, which makes it a common default for web server pools.
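The cycling behavior can be sketched in a few lines of Python. This is a minimal illustration, not a production balancer; the class name and the server names are made up for the example.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers from a fixed pool in strict rotation."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("server pool must not be empty")
        self._cycle = cycle(list(servers))

    def next_server(self):
        # Each call returns the next server in line, wrapping around at the end.
        return next(self._cycle)


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
picks = [balancer.next_server() for _ in range(5)]
print(picks)  # ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```

Note that the rotation ignores how busy each server is, which is why it works best with servers of similar capacity.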

Least Connections

The Least Connections method is a dynamic load balancing strategy that selects the server with the fewest active connections to handle each new request. This approach is particularly beneficial for long-lived connections, such as those in streaming media or large file transfers. It helps prevent any single server from becoming a bottleneck by steering traffic to less busy servers.
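A rough sketch of the bookkeeping involved: the balancer keeps a counter of open connections per server, increments it when a connection is assigned, and decrements it when the connection closes. The class and method names below are hypothetical.

```python
class LeastConnectionsBalancer:
    """Tracks active connections and picks the least busy server."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # Choose the server with the fewest active connections.
        # Ties go to the server listed first in the pool.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when a connection closes.
        if self.active[server] > 0:
            self.active[server] -= 1


lc = LeastConnectionsBalancer(["app-1", "app-2"])
first = lc.acquire()    # app-1 (tie, first in the pool)
second = lc.acquire()   # app-2
lc.release(first)       # app-1 finishes its connection
third = lc.acquire()    # app-1 again, since it now has fewer connections
print(first, second, third)
```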

Weighted Round Robin

Weighted Round Robin is an enhanced version of Round Robin that assigns more requests to servers with greater capacity. Each server is given a weight that reflects its processing power or current traffic, and this weight determines how many requests it receives relative to the others in the pool. This allows for more flexibility and efficiency, particularly when servers have differing capabilities.
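There are several ways to turn weights into a request sequence. The sketch below uses one interleaving variant, often called smooth weighted round robin, which avoids sending long bursts to the heaviest server. The class name and the weights are assumptions for the example.

```python
class SmoothWeightedRoundRobin:
    """Distributes requests in proportion to per-server weights."""

    def __init__(self, weights):
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be a non-empty mapping of positive numbers")
        self.weights = dict(weights)
        self.current = {server: 0 for server in self.weights}
        self.total = sum(self.weights.values())

    def next_server(self):
        # Every server gains its weight, the highest score wins,
        # and the winner is pushed back by the total weight.
        for server, weight in self.weights.items():
            self.current[server] += weight
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


wrr = SmoothWeightedRoundRobin({"big": 3, "small": 1})
sequence = [wrr.next_server() for _ in range(8)]
print(sequence.count("big"), sequence.count("small"))  # 6 2
```

With weights of 3 and 1, the larger server receives three out of every four requests.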

Least Response Time

The Least Response Time method routes requests to the server with the lowest average response time to recent requests. This technique minimizes wait times, making it ideal for high-performance applications such as interactive websites, where speed is crucial.
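One way to approximate "average response time to recent requests" is a sliding window of the last few measurements per server. The window size, class name, and timing values below are illustrative assumptions.

```python
from collections import deque


class LeastResponseTimeBalancer:
    """Picks the server with the lowest average over recent response times."""

    def __init__(self, servers, window=5):
        # Keep only the most recent `window` samples per server.
        self.samples = {server: deque(maxlen=window) for server in servers}

    def record(self, server, seconds):
        self.samples[server].append(seconds)

    def average(self, server):
        samples = self.samples[server]
        # Servers with no samples yet score 0.0 so they get tried first.
        return sum(samples) / len(samples) if samples else 0.0

    def pick(self):
        return min(self.samples, key=self.average)


lrt = LeastResponseTimeBalancer(["app-1", "app-2"])
lrt.record("app-1", 0.120)
lrt.record("app-1", 0.080)
lrt.record("app-2", 0.050)
lrt.record("app-2", 0.070)
fastest_before = lrt.pick()   # app-2 averages 0.06 s against 0.10 s
lrt.record("app-2", 0.500)    # app-2 slows down
fastest_after = lrt.pick()    # app-1 is now the faster choice
print(fastest_before, fastest_after)
```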

IP Hashing

With IP Hashing, all requests from a specific IP address are consistently sent to the same host, maintaining session continuity. This is particularly important for applications where the user’s session data is stored locally on the server, such as in online shopping carts or user customization settings.
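A minimal sketch of the mapping, assuming a fixed list of servers. It uses SHA-256 rather than Python’s built-in `hash()`, because the built-in is randomized per process for strings and would not give the same answer across restarts. The function name and addresses are made up for the example.

```python
import hashlib


def pick_by_ip(client_ip, servers):
    """Map a client IP address to one server, the same one every time."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


pool = ["app-1", "app-2", "app-3"]
first_visit = pick_by_ip("203.0.113.7", pool)
second_visit = pick_by_ip("203.0.113.7", pool)
print(first_visit == second_visit)  # True
```

One limitation of this simple modulo scheme is that adding or removing a server remaps most clients. Consistent hashing is the usual remedy when the pool changes often.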

Weighted Least Connection

This approach extends the Least Connections method by considering each system’s capacity as well. It directs new connections to the least busy server relative to its ability to handle additional traffic. This technique is effective in environments where servers vary in performance and traffic handling capabilities.
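"Least busy relative to capacity" can be read as the lowest ratio of active connections to weight. The sketch below takes that reading; the function name, server names, and numbers are assumptions for illustration.

```python
def pick_weighted_least_connections(active, weights):
    """Return the server with the lowest connections-to-weight ratio."""
    return min(active, key=lambda server: active[server] / weights[server])


active = {"big": 6, "small": 3}    # current open connections
weights = {"big": 4, "small": 1}   # relative capacity
choice = pick_weighted_least_connections(active, weights)
print(choice)  # big
```

Although "big" has twice as many open connections, its ratio is 6 / 4 = 1.5 against 3 / 1 = 3.0 for "small", so it is still the less loaded server relative to its capacity.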

Resource Based (Adaptive) Method

The Resource Based (Adaptive) technique dynamically adjusts to each system’s real-time conditions. It uses current server load data to inform routing decisions, redirecting traffic to servers under less strain. This adaptive approach is suitable for networks with fluctuating demand.
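As a rough sketch, suppose each server reports its CPU and memory utilization to the balancer. The report format, the `ServerStats` type, and the 70/30 weighting are all hypothetical; real deployments choose their own metrics and formula.

```python
from dataclasses import dataclass


@dataclass
class ServerStats:
    cpu: float      # utilization from 0.0 to 1.0
    memory: float   # utilization from 0.0 to 1.0


def load_score(stats, cpu_weight=0.7, memory_weight=0.3):
    """Combine the metrics into one number; lower means less strain."""
    return cpu_weight * stats.cpu + memory_weight * stats.memory


def pick_least_loaded(reports):
    return min(reports, key=lambda name: load_score(reports[name]))


reports = {
    "app-1": ServerStats(cpu=0.9, memory=0.4),   # score 0.75
    "app-2": ServerStats(cpu=0.3, memory=0.8),   # score 0.45
}
target = pick_least_loaded(reports)
print(target)  # app-2
```

Because the reports are refreshed continuously, the choice follows the servers’ real-time conditions rather than a fixed schedule.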

Resource Based (SDN Adaptive)

This method extends traditional balancing with real-time data about network traffic flows and server health. It incorporates Software-Defined Networking (SDN) elements, dynamically adjusting routes to optimize network performance. It suits complex environments with highly variable traffic patterns.

Fixed Weighting

Fixed Weighting assigns a set weight to each server in the pool based on its expected load-handling capabilities. Traffic is then distributed according to these predetermined weights, producing a stable and predictable traffic balance. This approach works best when traffic patterns and host capabilities are well understood and remain consistent over time.

Source IP Hash Method

This method directs all requests from a particular source IP address to the same host, in the same way as the IP Hashing method described above. It suits applications that require a user’s interaction to remain on a single server.

URL Hash Load Method

The URL Hash Load technique routes requests based on a hash of the requested URL, so all requests for a specific URL are handled by the same server. This technique is particularly beneficial for websites where certain pages receive significantly higher traffic, because the same server can keep serving the same content quickly.
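A minimal sketch of URL-based routing follows. It hashes only the path and ignores the query string, which is an assumption made for this example; whether the query should count depends on the application. The function name, server names, and URLs are hypothetical.

```python
import hashlib
from urllib.parse import urlsplit


def pick_by_url(url, servers):
    """Send every request for the same URL path to the same server."""
    path = urlsplit(url).path or "/"
    digest = hashlib.sha256(path.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


cache_pool = ["cache-1", "cache-2", "cache-3"]
a = pick_by_url("https://example.com/products/42?ref=mail", cache_pool)
b = pick_by_url("https://example.com/products/42?ref=ad", cache_pool)
print(a == b)  # True
```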

Load Balancing and Proxy

Configuring a proxy to balance the load allows efficient traffic management across multiple servers. Proxies serve as intermediaries that distribute traffic and can also enhance security by filtering incoming data, much as a firewall does. They are particularly effective in complex network environments where balancing demand and security is paramount.

How Does Load Balancing Improve Scalability and Reliability in Distributed Systems?

Scalability

Load balancers enhance system scalability by distributing incoming traffic across multiple servers, ensuring no single one is overwhelmed. This allows for horizontal expansion, maintaining performance as demand increases. Scalability is crucial for businesses experiencing growth or seasonal spikes. It enables them to adjust resources dynamically and efficiently.

Reliability

Balancers boost reliability by directing traffic to operational servers and rerouting it away from failed ones, minimizing downtime and maintaining service availability. This is vital in critical sectors like finance, healthcare, and e-commerce, where it reduces the risk of system overloads and helps ensure continuous operation.
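The rerouting behavior can be sketched as a pool that skips servers marked as down. How a server gets marked down (for example, by a periodic health check) is outside this sketch, and the class and method names are hypothetical.

```python
class FailoverPool:
    """Round-robin rotation that skips servers currently marked unhealthy."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0

    def mark_down(self, server):
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def pick(self):
        # Try each server at most once per call.
        for _ in range(len(self.servers)):
            server = self.servers[self._next % len(self.servers)]
            self._next += 1
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


failover = FailoverPool(["app-1", "app-2", "app-3"])
failover.mark_down("app-2")
routed = [failover.pick() for _ in range(4)]
print(routed)  # ['app-1', 'app-3', 'app-1', 'app-3']
```

Once `mark_up("app-2")` is called, the server rejoins the rotation without any other change.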

Tips for Implementing and Managing Load Balancers

- Assess Network Requirements

Thoroughly assess your network’s specific needs. Consider current traffic loads, the types of applications in use, and anticipated growth. This assessment will help determine the most appropriate method and technology to deploy.

- Choose the Right Load Balancer

Select a balancer based on your network’s size, complexity, and specific requirements. Consider whether a hardware load balancer, a software load balancer, or a cloud service such as Cloudflare best suits your needs.

- Plan for Future Scalability

Ensure that your solution can scale as your network grows. Adding more servers or resources should be easy and should not require significant changes to the existing setup. This planning prevents potential bottlenecks and supports seamless growth.

- Implement Redundancy

To implement redundancy, configure multiple balancers in a failover setup so that there is no single point of failure. If one balancer fails, the others can take over without disrupting the service.

- Regularly Monitor Performance

Continuously monitor your balancer’s performance to ensure it operates efficiently. Use monitoring tools to track metrics such as traffic distribution, server load, and uptime.

- Secure Your Balancer

Implement security measures such as firewalls, intrusion detection systems, and SSL offloading to protect against external threats. Regularly update and patch your balancers to protect against vulnerabilities.

- Test Your Load Balancer Regularly

Make sure your balancer can handle expected traffic volumes and fails over correctly. Testing helps verify that all balancer components are functioning as intended and can withstand peak loads and potential network failures.

Conclusion

Load balancing is an essential strategy for improving the efficiency, reliability, and scalability of network services. By understanding and implementing appropriate balancing techniques and algorithms, you can ensure optimal performance and user satisfaction.

FAQ

Load balancing is a technology that distributes network or application traffic across multiple servers to ensure no single one becomes overwhelmed. It optimizes resource use and maximizes throughput.

Load balancers are needed to enhance application reliability, improve user experience by reducing response times, and ensure system resilience against failures.

The Round Robin algorithm distributes client requests sequentially across all servers in the pool, ensuring a uniform traffic distribution.

Load balancers enhance network performance by efficiently distributing traffic across multiple servers, which prevents any single server from becoming a bottleneck.

The Least Connections algorithm routes new requests to the server with the fewest active connections. It optimizes the workload distribution and improves response times.

Choose a load balancer based on the type of applications, system capacity, required response times, session persistence needs, and overall network architecture.

Network load balancing distributes requests based on network and transport layer information such as IP addresses and TCP ports. Application-level balancing uses richer data from user sessions, messages, and cookies.

In cloud computing, a balancer, often offered as a managed service by providers such as Cloudflare, distributes workloads across multiple cloud resources. It improves resource utilization and application performance while ensuring reliability and scalability.